The Importance of the Robots.txt File for SEO Positioning in WordPress

In an era where digital presence is synonymous with success, the robots.txt file becomes a key player in the SEO strategy of any website, especially for those who rely on WordPress. In 2024, optimising this file is not just recommended but necessary. Let us see how a targeted robots.txt configuration can transform your site's ranking.

What the Robots.txt File Is and Why It Matters

Before diving into optimisation techniques, it is worth understanding what the robots.txt file is and why it plays such a critical role. This plain-text file acts as a gatekeeper for search engine crawlers, telling them which parts of your site they may crawl and which they should leave alone. Well-behaved crawlers request it before crawling anything else, which makes it the first point of contact between your site and search engines.
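Crawlers look for the file at a fixed location: the root of each combination of protocol, host and port, so `https://example.com/robots.txt` governs every page on `https://example.com`. Here is a minimal Python sketch of that mapping using only the standard library. The function name and the URLs are hypothetical examples.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt URL that governs crawling of page_url.

    The file always sits at the root of the same scheme, host and port,
    so the path, query string and fragment of page_url are discarded.
    """
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


if __name__ == "__main__":
    # Hypothetical page URL on a WordPress shop.
    print(robots_txt_url("https://example.com/blog/hello-world/?add-to-cart=42"))
    # prints https://example.com/robots.txt
```

A subdomain such as `shop.example.com` therefore needs its own robots.txt file.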

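A properly configured robots.txt file can noticeably improve your website's SEO. It stops search engines from wasting time and resources crawling pages that bring no value, such as private or less relevant pages. By focusing crawlers' attention on genuinely meaningful content, you help search engines find and evaluate the pages that matter, which supports the visibility and relevance of your site in search results. Keep in mind that robots.txt controls crawling, not indexing. A blocked URL can still appear in results if other pages link to it, so pages that must stay out of the index need a noindex directive instead. And because anyone can read robots.txt, it is no way to hide private content.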

Ultimately, the robots.txt file is not just a simple text file, but a powerful SEO optimisation tool. Its correct configuration can make all the difference in the positioning of your WordPress site in the vast and competitive digital landscape of 2024.

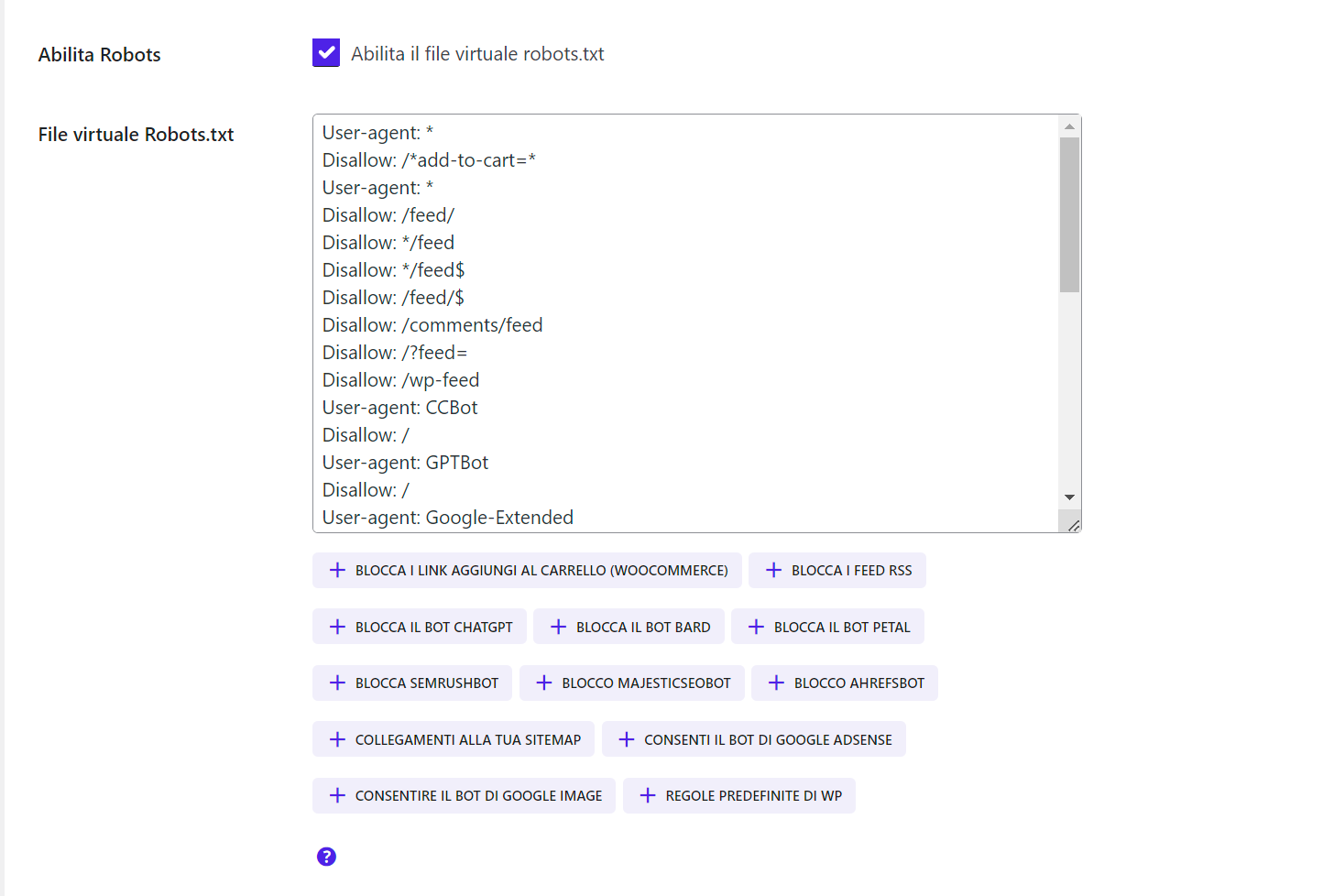

Analysis of a Specific Configuration

Let us look at a specific configuration for WordPress in 2024:

```
User-agent: *
# Any URL containing "add-to-cart=" (shopping cart links)
Disallow: /*add-to-cart=*
# Feed URLs in their various forms
Disallow: /feed/
Disallow: */feed
Disallow: */feed$
Disallow: /feed/$
Disallow: /comments/feed
Disallow: /?feed=
Disallow: /wp-feed
```

In this configuration, `User-agent: *` indicates that the rules that follow apply to all crawlers. With `Disallow: /*add-to-cart=*`, we ask crawlers not to crawl any URL that contains "add-to-cart=", because the `*` wildcard matches any sequence of characters. This is crucial for online shops (for example, WooCommerce's `?add-to-cart=` links), since these shopping cart URLs generally add no SEO value. Similarly, `Disallow: /feed/` and its variations block the different forms of feed URL, including comment feeds and other dynamic feeds, which are rarely relevant for SEO. A trailing `$` restricts a rule to URLs that end exactly at that point.
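To make the wildcard rules concrete, here is a minimal Python sketch of how a crawler could test a URL path against these Disallow patterns. It assumes the matching semantics described in RFC 9309 and Google's documentation:

- `*` matches any sequence of characters.
- A trailing `$` anchors the end of the URL.
- Patterns match from the start of the path.

Real parsers differ in edge cases, and RFC 9309 formally expects each pattern to begin with `/`. Allow rules and longest-match precedence are left out because this configuration uses only Disallow. The test paths are hypothetical.

```python
import re

# Disallow patterns from the configuration above, in the same order.
WORDPRESS_DISALLOW = [
    "/*add-to-cart=*",
    "/feed/",
    "*/feed",
    "*/feed$",
    "/feed/$",
    "/comments/feed",
    "/?feed=",
    "/wp-feed",
]


def pattern_to_regex(pattern: str) -> "re.Pattern[str]":
    """Translate a robots.txt path pattern into a compiled regex.

    '*' matches any run of characters (including none) and a trailing '$'
    pins the pattern to the end of the URL; every other character is taken
    literally. re.match() anchors the result at the start of the path.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def matching_rules(path: str, patterns: list[str]) -> list[str]:
    """Return the patterns that match path (path plus any query string)."""
    return [p for p in patterns if pattern_to_regex(p).match(path)]


if __name__ == "__main__":
    for path in ["/shop/?add-to-cart=42", "/blog/feed/", "/?feed=rss2",
                 "/feedback/", "/about/"]:
        hits = matching_rules(path, WORDPRESS_DISALLOW)
        print(f"{path:<24} blocked={bool(hits)} rules={hits}")
```

With these rules, `/feedback/` is reported as blocked by `*/feed`. Without a trailing `$`, that pattern matches any URL containing `/feed`, so it is worth testing the rules against your own URLs before publishing them.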

```
User-agent: CCBot
Disallow: /
```

In this section, we specify that CCBot, the crawler operated by Common Crawl, must not crawl any part of the site. The same approach can be applied to other crawlers, for example to reduce the load on the server or to keep out bots that offer your site no benefit. Bear in mind that robots.txt is a voluntary convention. Reputable crawlers such as CCBot honour it, but genuinely harmful bots can simply ignore it.
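To see how a crawler picks the group addressed to it, Python's standard-library `urllib.robotparser` can parse a simplified version of the rules. It supports user-agent groups and plain path prefixes, but not the `*` and `$` wildcards, so this sketch keeps only prefix rules. `example.com` is a placeholder domain.

```python
from urllib.robotparser import RobotFileParser

# Simplified rules: urllib.robotparser does plain prefix matching only and
# does not understand the * and $ wildcards, so none are used here.
ROBOTS_TXT = """\
User-agent: *
Disallow: /feed/

User-agent: CCBot
Disallow: /
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text held in memory instead of fetching it."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    for agent in ("Googlebot", "CCBot"):
        for url in ("https://example.com/blog/hello-world/",
                    "https://example.com/feed/"):
            # A named group (CCBot) wins over the catch-all '*' group.
            print(agent, url, rp.can_fetch(agent, url))
```

Googlebot falls back to the `*` group and is only kept out of `/feed/`. CCBot matches its own group and is refused everywhere.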

```
Sitemap: https://iltuodominio.estensione/sitemaps.xml
```

Including the path to your sitemap is essential. It makes it easier for search engines to find and crawl your pages efficiently, ensuring that relevant content is easily accessible. Replace the placeholder domain with your own, and give the full, absolute URL of the sitemap.
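To check what a parser actually reads from the Sitemap line, Python's `urllib.robotparser` exposes the declared sitemaps through `site_maps()` (available from Python 3.8). The absolute-URL check is a small hypothetical helper. The sitemap address is the placeholder from the example above.

```python
from urllib.parse import urlsplit
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: CCBot
Disallow: /

Sitemap: https://iltuodominio.estensione/sitemaps.xml
"""


def declared_sitemaps(robots_txt: str) -> list[str]:
    """Return the Sitemap URLs declared in robots_txt (empty list if none)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # site_maps() returns None when the file has no Sitemap line.
    return parser.site_maps() or []


def is_absolute_http_url(url: str) -> bool:
    """True for full http(s) URLs; False for relative paths like /sitemaps.xml."""
    parts = urlsplit(url)
    return parts.scheme in ("http", "https") and bool(parts.netloc)


if __name__ == "__main__":
    for url in declared_sitemaps(ROBOTS_TXT):
        print(url, "absolute" if is_absolute_http_url(url) else "NOT absolute")
```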

This configuration of the robots.txt file for WordPress is an effective way to steer search engines toward the content you actually want crawled and indexed, thus improving the visibility and SEO effectiveness of your site.

Final Considerations

This example of configuring the robots.txt file for WordPress is only a starting point. Each site has unique requirements, so it is important to customise the configuration to the specific needs of your site.

A careful configuration of the robots.txt file can bring significant improvements in online visibility and SEO. However, it is essential to proceed with caution: a mistake in the file can stop search engines from reaching important parts of your site and damage your online presence. For example, a single `Disallow: /` line in the `User-agent: *` group blocks every compliant crawler from the entire site.

Always remember to check your robots.txt configuration with reliable online tools (such as Google Search Console) to make sure it is set up correctly and does not hinder your SEO strategy.
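Before uploading a new version of the file, a quick local check can catch the costliest mistake: a `Disallow: /` rule that shuts a crawler out of the whole site. The Python sketch below is a hypothetical helper (`blanket_blocks` is not part of any library). It treats consecutive User-agent lines as one group, as RFC 9309 does, and ignores comments. It does not evaluate wildcards, and it complements rather than replaces testing in Google Search Console.

```python
# Hypothetical pre-publication check (not a standard tool): report every user
# agent whose group contains "Disallow: /", i.e. a block on the whole site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /*add-to-cart=*
Disallow: /feed/
Disallow: */feed
Disallow: */feed$
Disallow: /feed/$
Disallow: /comments/feed
Disallow: /?feed=
Disallow: /wp-feed

User-agent: CCBot
Disallow: /

Sitemap: https://iltuodominio.estensione/sitemaps.xml
"""


def blanket_blocks(robots_txt: str) -> list[str]:
    """Return the user agents whose group disallows the entire site."""
    blocked: list[str] = []
    agents: list[str] = []  # user agents of the group being read
    in_rules = False        # True once the current group has rule lines
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if ":" not in line:
            continue  # blank or malformed line
        key, value = line.split(":", 1)
        key, value = key.strip().lower(), value.strip()
        if key == "user-agent":
            if in_rules:  # a user-agent line after rules starts a new group
                agents, in_rules = [], False
            agents.append(value)
        elif key in ("allow", "disallow"):
            in_rules = True
            if key == "disallow" and value == "/":
                for agent in agents:
                    if agent not in blocked:
                        blocked.append(agent)
    return blocked


if __name__ == "__main__":
    blocked = blanket_blocks(ROBOTS_TXT)
    print("Whole site blocked for:", blocked)
    if "*" in blocked:
        print("Warning: every compliant crawler is blocked from the whole site.")
```

On the configuration discussed here, the check reports only CCBot, which is intentional. Seeing `*` in the list would mean the site is closed to all compliant crawlers.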

In conclusion, the robots.txt file is a powerful tool in every webmaster's toolbox. With the right configuration, it can elevate your SEO strategy and significantly improve the visibility of your WordPress site in 2024.